Piotr Bielak

AI Frameworks Engineer @ Intel | Assistant Professor @ PWr

Intel Corporation

Wrocław University of Science and Technology

About me

I am a graph machine learning specialist with a recently obtained PhD and over 4 years of industrial experience. My research centers on unsupervised and self-supervised graph representation learning, and my work has received over 100 citations. As the author of both conference and journal articles, I have introduced several pioneering methods in this field, such as Graph Barlow Twins (GBT), AttrE2vec, and FILDNE. I am a dedicated Python enthusiast and a well-rounded practitioner, proficient in full-stack machine learning development, from model implementation and evaluation to DevOps/MLOps.


Interests

- Graph Machine Learning

- Representation Learning

- Unsupervised Learning

- Self-supervised Learning

Education

PhD in Computer Science (Machine Learning), 2019-2023

Wrocław University of Science and Technology

MSc in Computer Science (Data Science specialization), 2018-2019

Wrocław University of Science and Technology

BEng in Computer Science, 2014-2018

Wrocław University of Science and Technology

Publications

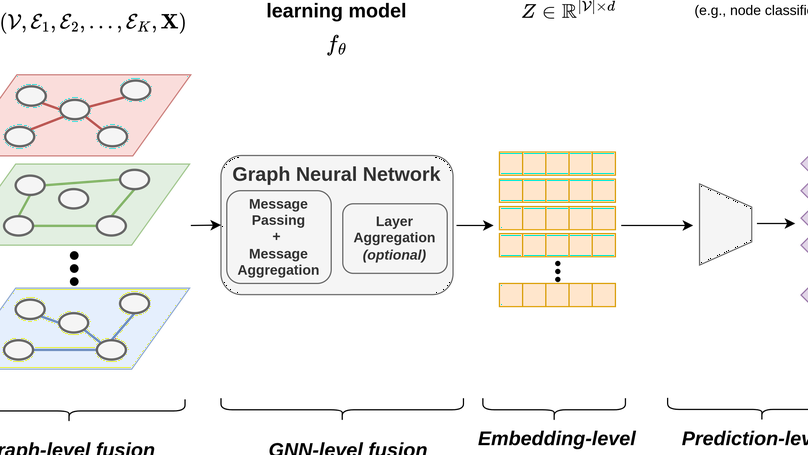

In recent years, unsupervised and self-supervised graph representation learning has gained popularity in the research community. However, most proposed methods focus on homogeneous networks, whereas real-world graphs often contain multiple node and edge types. Multiplex graphs, a special type of heterogeneous graph, carry richer information, provide better modeling capabilities and integrate more detailed data from potentially different sources. The diverse edge types in multiplex graphs provide more context and insight into the underlying processes of representation learning. In this paper, we tackle the problem of learning node representations in multiplex networks in an unsupervised or self-supervised manner. To that end, we explore diverse information fusion schemes performed at different levels of the graph processing pipeline. The detailed analysis and experimental evaluation of various scenarios inspired us to propose improvements in how to construct GNN architectures that deal with multiplex graphs.

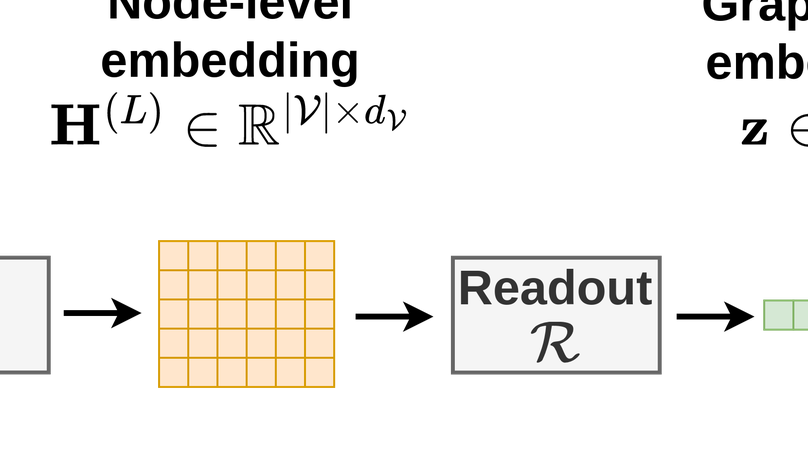

Graph machine learning models have been successfully deployed in a variety of application areas. Graph Neural Networks (GNNs), one of the most prominent types of models, provide an elegant way of extracting expressive node-level representation vectors, which can be used to solve node-related problems, such as classifying users in a social network. However, many tasks, e.g., molecular applications, require representations at the level of the whole graph. To convert node-level representations into a graph-level vector, a so-called readout function must be applied. In this work, we study existing readout methods, including simple non-trainable ones as well as complex, parametrized models. We introduce the concept of ensemble-based readout functions that combine either representations or predictions. Our experiments show that such ensembles either outperform simple single readouts or perform similarly to the complex, parametrized ones at a fraction of the model complexity.
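The readout idea can be illustrated with a small sketch. It implements three simple non-trainable readouts (sum, mean and max) and two naive ways of ensembling them. This is a hypothetical, dependency-free example. The function names, the plain averaging used to combine ensemble members, and the stand-in prediction heads are all assumptions. They are not the exact ensembling schemes studied in the paper.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
NodeEmbeddings = Sequence[Vector]  # one embedding vector per node
Readout = Callable[[NodeEmbeddings], Vector]
Head = Callable[[Vector], float]  # hypothetical per-readout prediction head


def sum_readout(h: NodeEmbeddings) -> Vector:
    """Sum node embeddings feature-wise."""
    return [sum(col) for col in zip(*h)]


def mean_readout(h: NodeEmbeddings) -> Vector:
    """Average node embeddings feature-wise."""
    return [sum(col) / len(h) for col in zip(*h)]


def max_readout(h: NodeEmbeddings) -> Vector:
    """Take the feature-wise maximum over all nodes."""
    return [max(col) for col in zip(*h)]


READOUTS: List[Readout] = [sum_readout, mean_readout, max_readout]


def ensemble_representation(h: NodeEmbeddings, readouts: Sequence[Readout]) -> Vector:
    """Representation-level ensemble (assumed scheme): average the graph vectors of all readouts."""
    vectors = [readout(h) for readout in readouts]
    return [sum(values) / len(vectors) for values in zip(*vectors)]


def ensemble_prediction(h: NodeEmbeddings, members: Sequence[Tuple[Readout, Head]]) -> float:
    """Prediction-level ensemble (assumed scheme): each readout feeds its own head; average the scores."""
    scores = [head(readout(h)) for readout, head in members]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy graph with three nodes and 2-dimensional node embeddings.
    h = [[1.0, 2.0], [3.0, 0.0], [2.0, 1.0]]
    print(ensemble_representation(h, READOUTS))
    # A trivial stand-in head that scores a graph by its first feature.
    print(ensemble_prediction(h, [(r, lambda v: v[0]) for r in READOUTS]))
```

Averaging readout vectors requires every member to return a vector of the same dimension, which holds for sum, mean and max. Concatenation would be an equally simple alternative for the representation-level variant.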

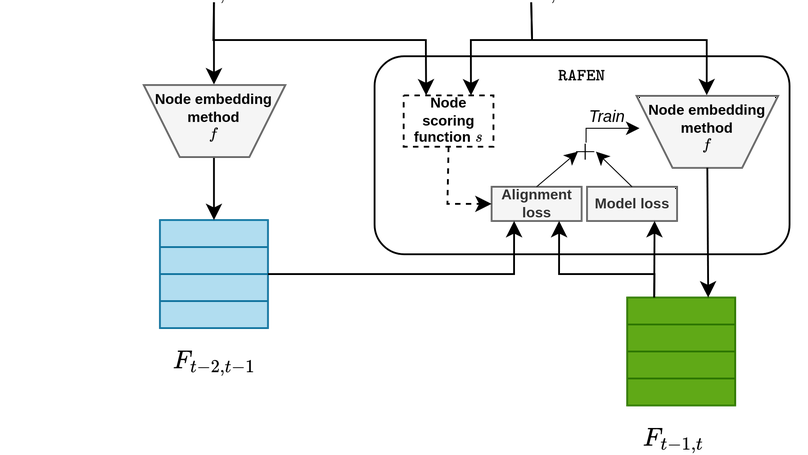

Learning node representations has been a crucial area of graph machine learning research. A well-defined node embedding model should reflect both node features and the graph structure in the final embedding. In the case of dynamic graphs, this problem becomes even more complex, as both features and structure may change over time. The embeddings of particular nodes should remain comparable during the evolution of the graph, which can be achieved by applying an alignment procedure. In existing works, this step was often applied after the node embeddings had already been computed. In this paper, we introduce RAFEN, a framework that allows enriching any existing node embedding method with the aforementioned alignment term, so that aligned node embeddings are learned during training. We propose several variants of our framework and demonstrate their performance on six real-world datasets. RAFEN achieves performance on par with or better than existing approaches without requiring additional processing steps.

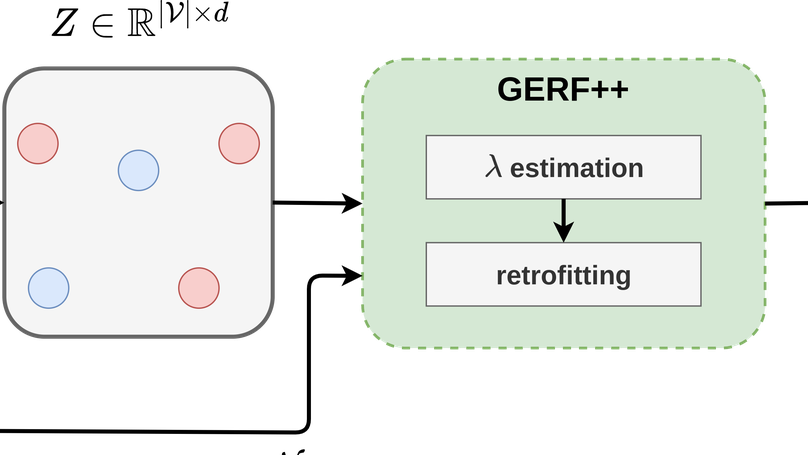

Representation learning for graphs has attracted increasing attention in recent years. In particular, this work focuses on a new problem in this realm: learning attributed graph embeddings. The setting considers how to update existing node representations from structural graph embedding methods when additional node attributes are given. Graph Embedding RetroFitting (GERF) was recently proposed to this end. It is a method that delivers a compound node embedding that follows both the graph structure and the attribute space similarity. It takes existing structural node embeddings and retrofits them according to the neighborhood defined by the node attribute space (by optimizing the invariance loss and the attribute neighbor loss). To refine the GERF method, we simplify its objective function and provide an algorithm for automatic hyperparameter estimation. We also extend the experimental scenario with a more robust hyperparameter search for all considered methods and with a link prediction task for evaluating the node embeddings.

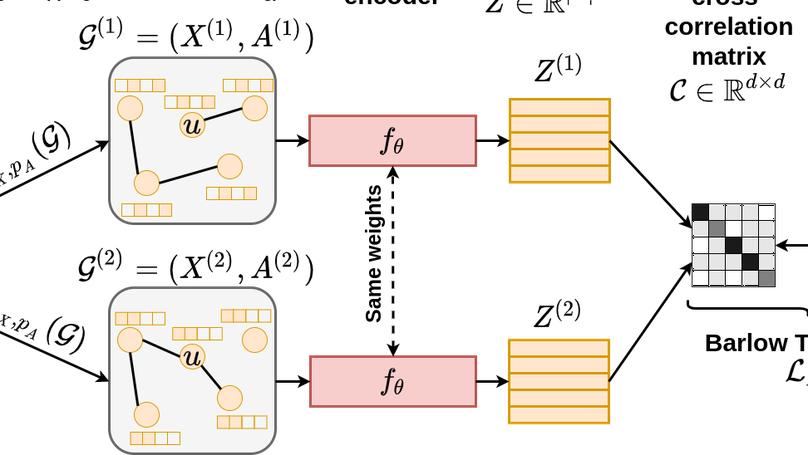

The self-supervised learning (SSL) paradigm is an essential area of exploration that tries to eliminate the need for expensive data labeling. Despite the great success of SSL methods in computer vision and natural language processing, most of them employ contrastive learning objectives that require negative samples, which are hard to define. This becomes even more challenging in the case of graphs and is a bottleneck for achieving robust representations. To overcome such limitations, we propose Graph Barlow Twins, a framework for self-supervised graph representation learning that uses a cross-correlation-based loss function instead of negative samples. Moreover, unlike BGRL, the state-of-the-art self-supervised graph representation learning method, it does not rely on asymmetric neural network architectures. We show that our method achieves results competitive with the best self-supervised methods and with fully supervised ones, while requiring fewer hyperparameters and substantially less computation time (ca. 30 times faster than BGRL).
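A cross-correlation objective of this kind can be sketched in a few lines. The following is a minimal, dependency-free Python illustration of a Barlow Twins-style loss. It is not the reference Graph Barlow Twins implementation. It assumes that `z_a` and `z_b` are node embeddings of two augmented views of the same graph, produced by a shared encoder. The encoder and the augmentations are omitted. All function names, as well as the default off-diagonal weight λ = 1/D, are assumptions of this sketch.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]  # rows = nodes (batch), columns = embedding dimensions


def standardize(z: Matrix, eps: float = 1e-8) -> Matrix:
    """Shift each embedding dimension to zero mean and scale it to unit std over the batch."""
    n, d = len(z), len(z[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        column = [z[i][j] for i in range(n)]
        mean = sum(column) / n
        std = math.sqrt(sum((x - mean) ** 2 for x in column) / n)
        for i in range(n):
            out[i][j] = (z[i][j] - mean) / (std + eps)
    return out


def cross_correlation(z_a: Matrix, z_b: Matrix) -> Matrix:
    """D x D cross-correlation matrix between the standardized embeddings of two views."""
    a, b = standardize(z_a), standardize(z_b)
    n, d = len(a), len(a[0])
    return [[sum(a[k][i] * b[k][j] for k in range(n)) / n for j in range(d)] for i in range(d)]


def barlow_twins_loss(z_a: Matrix, z_b: Matrix, lam: Optional[float] = None) -> float:
    """Push the diagonal of C towards 1 (invariance) and off-diagonal entries towards 0."""
    c = cross_correlation(z_a, z_b)
    d = len(c)
    if lam is None:
        lam = 1.0 / d  # assumed default weight for the off-diagonal (redundancy) term
    on_diagonal = sum((1.0 - c[i][i]) ** 2 for i in range(d))
    off_diagonal = sum(c[i][j] ** 2 for i in range(d) for j in range(d) if i != j)
    return on_diagonal + lam * off_diagonal
```

The diagonal term makes each embedding dimension invariant across the two views. The off-diagonal term decorrelates different dimensions. Together they discourage collapsed representations without requiring any negative samples. In practice, the same quantity would be computed on PyTorch tensors inside the training loop.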

Experience

I am part of the AI Frameworks team, which works on the optimization and development of the Deep Graph Library (DGL).

Tech stack: Python, PyTorch, DGL, GNN

Continuing my research on graph machine learning methods at the Department of Artificial Intelligence. My primary focus is on graph representation learning, complemented by expertise in self-supervised and unsupervised learning. Responsible for leading a research group dedicated to graph representation learning.

Research areas: graph representation learning, self-supervised learning

Teaching: Representation Learning, Script Languages

During the research visit at the Lucy Institute for Data and Society (Prof. Nitesh Chawla), two projects were undertaken: (1) building representations of neural networks based on their weights and training dynamics (weight-space models), and (2) developing a novel self-supervised graph representation learning method founded on the Joint Embedding Predictive Architecture. Responsibilities spanned the full research stack, i.e., problem definition, model implementation and experimental evaluation.

Tech stack: Python, PyTorch, DVC, Scikit-learn, Hydra, PyTorch-Geometric, GNN

Development of machine learning solutions tailored for debt collection processes. This involved the creation and deployment of predictive models, along with automation of data pipelines to enhance the efficiency and effectiveness of debt collection operations.

Tech stack: Python, PyTorch, DVC, Scikit-learn, Pandas, XGBoost

Development of machine learning-based recommendation solutions for company-to-company interactions. The role covered the entire project pipeline: from data preprocessing and feature engineering (text representations and graph building), through model development (GNN and recommendation models), to the final deployment of these models, ensuring that the recommendations were tuned to the specific needs of each company.

Tech stack: Python, PyTorch, DVC, Pandas, Jupyter, PyTorch-Geometric, GNN, Scikit-learn, Sentence-Transformers, Docker, Google Cloud, MLflow, Weaviate, Airflow, Streamlit

Development of a user behavior prediction model based on clickstream data, using gradient-boosted tree classification. Shared responsibilities across the full pipeline, from data cleaning and feature extraction to model training and evaluation, as well as demo preparation and a production-level PoC implementation.

Tech stack: Python, PyTorch, DVC, AWS, Docker, Jupyter, XGBoost, Redis

Development of a model for predicting overdue financial transactions based on a transaction graph. Contributions spanned various stages of the project pipeline, including data preprocessing, feature extraction, model training and evaluation.

Tech stack: Python, PyTorch, DVC, Docker, Jupyter, Featuretools, NumPy, GNN, XGBoost

Recently completed PhD studies at the Department of Artificial Intelligence, accompanied by research in various areas, with a primary focus on graph representation learning, complemented by expertise in self-supervised and unsupervised learning. Responsible for leading a research group dedicated to graph representation learning. Additional practical experience in teaching, including active involvement in developing educational materials for the Artificial Intelligence master’s degree program.

Research areas: graph representation learning, self-supervised learning

Teaching: Probabilistic Machine Learning, Representation Learning, Large-Scale Data Processing

Within the Public Cloud team, responsibilities encompassed the maintenance and development of the OpenStack cloud infrastructure. Key tasks included implementing test automation with Jenkins and Gerrit, which streamlined the testing process. A notable achievement was presenting the talk “From messy XML to wonderful YAML and pretty JobDSL – an in-Jenkins migration story” at the OpenStack Summit Berlin 2018.

Tech stack: Python, Bash, OpenStack, Jenkins, Gerrit

The role involved research and development of a machine learning-based resource manager for modern cluster schedulers. The work aimed to advance resource allocation methods, using state-of-the-art reinforcement learning techniques to optimize the efficiency and scalability of cluster management systems. Responsibilities spanned the entire project pipeline, including environment preparation, model implementation and result analysis.

Tech stack: Python, TensorFlow, Keras, Reinforcement Learning

Full-stack web development of an application for staff room allocation. Responsibilities covered both the frontend and the backend of the project, including the creation of interactive room maps and the development of the backend REST API, as well as bug fixing and the implementation of new features.

Tech stack: Java, Spring Boot, MongoDB, AngularJS

Automation of integration tests for a management application for logistics companies. Responsibilities encompassed various aspects, including specification analysis, defect reporting, and the creation and review of test scripts. Additionally, a secondary project was undertaken involving the development of a test script crawler and result analyzer in Python, contributing to more efficient testing processes and quality assurance.

Tech stack: Python, BeautifulSoup

Contact

- piotr.bielak@pwr.edu.pl

- Wybrzeże Stanisława Wyspiańskiego 27, Wrocław, Lower Silesia 50-370

- DM me on Twitter

- DM me on LinkedIn